Why we don't want just hardcore programmers anymore

Today's software developer can't just be interested in the machine itself, which is what fascinated me when I was growing up programming. He or she must be interested in understanding and solving actual problems for people and businesses, and be able to join the dots back to the underlying technology.

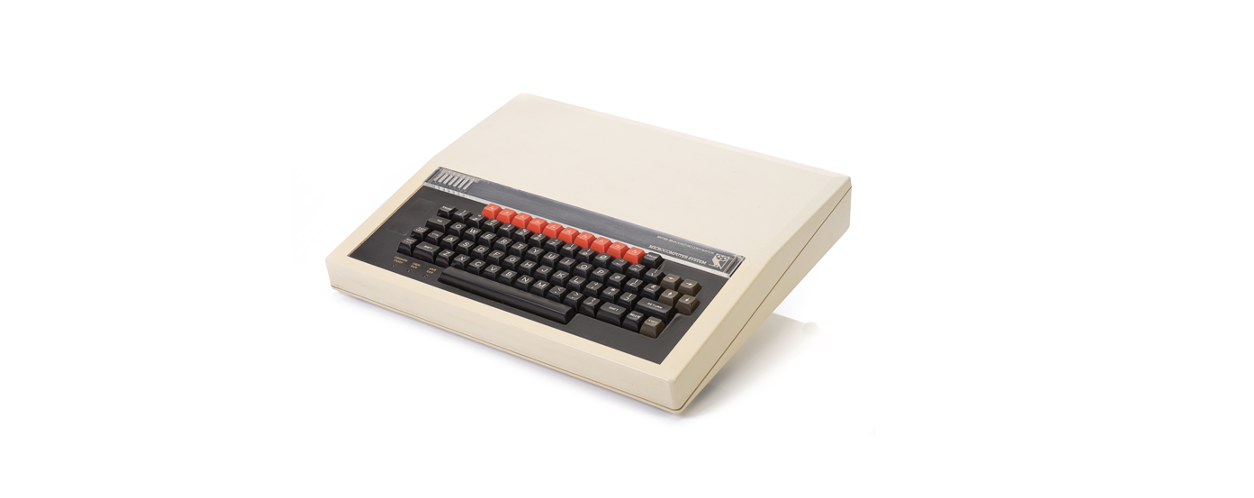

I was a little kid, a few years old, when 8-bit "home computers" started to make fairly common appearances in wealthier children's homes. A home computer was expensive: a decent small machine for playing games and basic programming, together with a cassette recorder for loading and saving code and a TV to connect it to, would probably cost £400 or so, roughly a typical month's pre-tax salary. That was about 20% of the price of a brand new small Ford car. The computer, say a BBC Model B, had a 2MHz 6502 processor and 32KB of memory. A cut-down version of that machine was what I taught myself to program on, in BASIC or assembler. More powerful computers existed, such as those made by PRIME, but they had directly connected terminals and there were only a few in the country; accessing one often meant travelling a long way and paying huge sums of money by the hour.

In those days the rate of innovation in the core technology was astonishing. Processors went from 8-bit CPUs to 16-bit, 32-bit and ultimately 64-bit. In 1985 the first 80386 came out, a 32-bit processor that would bring 32-bit computing to home PCs, followed by Sun's SPARC and the first ARMs. From the early 80s to the early 90s, home computer CPUs went from 2MHz to 66MHz, which corresponds to clock speeds doubling roughly every two years, along with increasing word lengths and faster memory. Caches were only starting to become a thing.
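As a rough check on that rate, the figures above can be worked through as follows. This treats "early 80s to early 90s" as about ten years, which is an approximation rather than an exact date range.

$$
\frac{66\,\text{MHz}}{2\,\text{MHz}} = 33 \approx 2^{5}
\qquad\Rightarrow\qquad
f(t) \approx 2\,\text{MHz} \times 2^{t/2}, \quad f(10) \approx 2\,\text{MHz} \times 2^{5} = 64\,\text{MHz}
$$

Five doublings in about ten years is one doubling every two years or so. Carrying the same rate on for another decade gives roughly $66\,\text{MHz} \times 2^{5} \approx 2.1\,\text{GHz}$.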

The same massive acceleration happened in storage density and speed: from cassette tapes and ROM cartridges, through different forms of floppy drive, to early Winchester hard drives and CD-ROMs. It even happened in graphics: my first computer could render 4 colours, but we could only afford a black-and-white TV to run it on. Later models had 16 colours, then 256 colours (which, with dithering, could produce impressive-looking pictures). Soon we had displays capable of millions of colours at resolutions high enough for rendering photos.

Network connectivity went the same way. The first modem I played with, used to connect to bulletin boards for sharing posts and uploading and downloading files, ran at 9600 baud, or just under 1KB/second. Modems got faster, the Internet came along and before we knew it we were browsing the web, sharing and purchasing things, and enjoying social media: brilliant applications of new technology.
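The "just under 1KB/second" figure follows from a quick calculation, assuming "9600 baud" is meant in the everyday sense of 9600 bits per second and that the link used the common 8-N-1 serial framing (one start bit, eight data bits, one stop bit, so 10 bits on the wire per byte). Both are assumptions, not details of the original setup.

$$
\frac{9600\ \text{bit/s}}{10\ \text{bit/byte}} = 960\ \text{byte/s} = \frac{960}{1024}\ \text{KiB/s} \approx 0.94\ \text{KiB/s}
$$

Without the start and stop bits it would be $9600 / 8 = 1200$ byte/s, so the per-byte framing overhead is what keeps the figure under 1KB/second.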

These massively increasing capabilities over 20 years allowed computers to do whole new classes of things that had never been done before: graphical user interfaces with a mouse (before that it was just keyboards and terminals), desktop publishing and driving laser printers, 3D games like Elite, Doom and Quake, flight simulators, optical character recognition for scanning documents, interactive music composition, photo editing, vector graphics and art on the computer, running multiple apps at the same time and cutting and pasting between them, and playing and editing videos. Computers went from being something for primitive games and programming to generally useful, productivity-enhancing tools that could be embraced by non-technical people. Every month there was some sort of new application or hardware innovation that accomplished things never seen before. This was the age when computers went from something special to something for everyone.

For programmers this was magic: software just got faster and faster automatically, without anyone having to do anything. Multiple cores weren't a thing. Ten years later, in the early 2000s, CPUs ran at somewhere between 1.3GHz and 2GHz as a result of this continual increase in performance. Programming was so exciting: it was like being a racing driver who, every two years, got a new car that went twice as fast as the previous one and came with new features and interior innovations, and as a programmer one could focus solely on writing and tuning code to take advantage of the new capabilities.

Around 2008-2010, the programming fun started to slow down because most apps for desktop computers had been developed and were just getting incrementally better. LCD screens had become commonplace and replaced CRTs. Programming got harder because clock speeds stopped going up and chipmakers added more cores instead, requiring programmers to write code that could run in parallel. I wrote my first multithreading module in the late 90s in anticipation of this trend; code running on top of the library would occasionally crash for unknown reasons, and race conditions were incredibly hard to debug. As all developers know, these problems still exist in multithreaded software written by me and everyone else today. VMware was created to solve the problem of machines being underutilised by insufficiently multithreaded software: run multiple "virtual" computers on one physical computer to drive up CPU usage.

The next big innovations were of course accessible Wi-Fi, mobile data (2G GPRS onwards) and the iPhone. At a previous company, Liberate Technologies, we had developed an incredibly capable embedded browser very similar to WebKit/Chromium/Safari and were interested in porting it to the BlackBerry, but management decided that was daft and that it belonged on TVs instead. That would have been an interesting bit of history if the decision had gone the other way! Cloud computing changed the consumption model to be more like the mainframes of long ago, but with cunningly worded names like "public cloud" (there's nothing public about it; compare and contrast with "public toilet"). The technology in cloud computing was mostly the same underlying stuff, but deployed, managed and consumed in a different way and at a much larger scale. All these trends point to real innovation happening farther and farther up the stack.

Nowadays, interest and innovation in the infrastructure underlying the services we consume have largely waned. There's no more life-changing stuff happening to processors, networks, storage or even graphics (even my laptop can drive three 6K monitors plus one 4K monitor plus the built-in Retina display all at the same time, which is just insane). All these things get better, but incrementally and without massive whole new classes of technology. New programming languages come and go but don't let software do radically different things as a result. Layers upon layers of frameworks and interpreted languages get built to make things easier to develop, but they also make things less computationally efficient. Algorithmic problems that used to require serious cunning to solve can now be handled with brute force instead.

Where innovation is now happening is in the services delivered by the technology and what they do for the consumer or business. Applications continue to subsume more and more processes that used to require human interaction: booking appointments, ordering food in restaurants, training and monitoring sales staff, outlining and refactoring code, and whatnot. Few truly new classes of technical thing that seem impressive are coming along, with the possible exception of ChatGPT. Whereas most AI for faster pattern recognition (recognising things in photos and videos, detecting patterns) and content generation has been based on custom acceleration of the same fundamental algorithms, ChatGPT feels different. It'll be a new class of "AI assistant" that everyone has available next to them to mine knowledge and present it in a useful manner. At Yellowbrick, we've challenged ChatGPT with knowledge sourcing, answering engineering interview questions, writing blog posts, summarising, writing catchy headings and decorrelating SQL, and it performs like a champ. It probably did a better job coding and refactoring fundamental algorithms than 60%+ of the engineers I've interviewed in my career, and a better job creating headlines and summaries than many product marketers we've interviewed.

This is why today's software developer can't just be interested in the machine itself, which is what fascinated me when I was growing up programming. He or she must be interested in understanding and solving actual problems for people and businesses, and be able to join the dots back to the underlying technology. The programmer has to care, end-to-end, about how stuff is built, sold, adopted, marketed and used. Market demand for purely hardcore programmers isn't going to grow at the same rate as in the past, when everyone successful was a hardcore programmer. We'll still need a pool of such people to maintain the stuff we've built in the past and to keep incrementally enhancing low-level devices and software like cameras, network adapters and drivers, but we won't need an ever-increasing number of them.